Structural pre-training improves physical accuracy of antibody structure prediction using deep learning

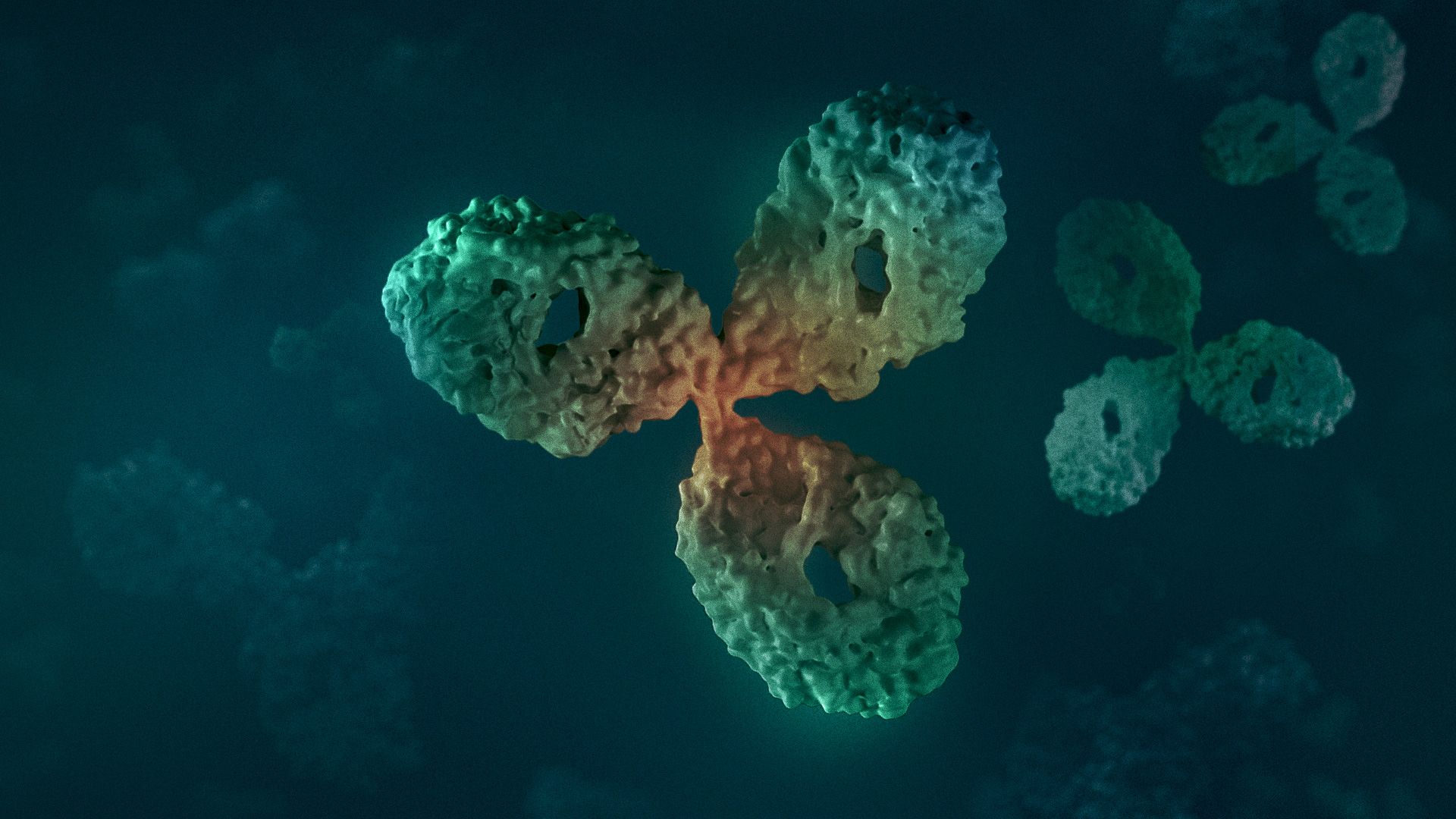

Executive summary: With AlphaFold2 and related advances in deep learning, the protein folding problem recently gained a practical solution. Some protein classes, such as antibodies, are structurally unique, so the general solution still leaves room for improvement, particularly in predicting the CDR-H3 loop, which plays a central role in an antibody's antigen recognition.

In this publication, we hypothesised that pre-training the network on a large, augmented set of models with correct physical geometries, rather than a small set of real antibody X-ray structures, would allow the network to learn better bond geometries. We show that fine-tuning such a pre-trained network on the task of shape prediction on real X-ray structures increases the number of correct peptide bond distances. We further demonstrate that pre-training allows the network to produce physically plausible shapes on an artificial set of CDR-H3s, showing that it can generalise to the vast antibody sequence space.

We hope that our strategy will benefit the development of deep-learning antibody models that rapidly generate physically plausible geometries without the burden of time-consuming energy minimization.

Full publication:

Jarosław Kończak, Bartosz Janusz, Jakub Młokosiewicz, Tadeusz Satława, Sonia Wróbel, Paweł Dudzic, Konrad Krawczyk. Structural pre-training improves physical accuracy of antibody structure prediction using deep learning.

doi: https://doi.org/10.1101/2022.12.06.519288

Introduction

The publication addresses the problem of improving antibody structure prediction by generating more physically plausible structures directly from the network.

With antibody diversity estimated at 10¹⁸ unique molecules, the 6,500 redundant, experimentally resolved antibody structures that are publicly available represent a relatively small sample. Most models that tackled antibody structure prediction were trained on this small public dataset, and, to the best of our knowledge, only IgFold employed AlphaFold to augment its input dataset. Such a small dataset is clearly sufficient to teach the broad features of an immunoglobulin shape, but it is not sufficient to make the structures physically plausible straight out of the network.

To address this issue, one may augment the input dataset to expose the network to more correct bond geometries. We demonstrate that pre-training a network on a large, augmented dataset of antibody model structures allows it to learn such physical features.
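
As a rough illustration of what "correct bond geometry" can mean in practice, the sketch below counts how many peptide bonds in a predicted backbone fall near the typical C–N bond length of about 1.33 Å. It is a minimal example under stated assumptions, not the evaluation used in the publication: the function names, the 0.1 Å tolerance, and the toy coordinates are all hypothetical.

```python
import math

# Typical peptide C-N bond length in angstroms.
IDEAL_CN_LENGTH = 1.33
# Hypothetical tolerance; the publication may use a different criterion.
TOLERANCE = 0.1


def peptide_bond_lengths(c_atoms, n_atoms):
    """Distance from the carbonyl C of residue i to the backbone N of residue i+1.

    c_atoms and n_atoms are per-residue (x, y, z) tuples in chain order.
    """
    return [math.dist(c_atoms[i], n_atoms[i + 1]) for i in range(len(c_atoms) - 1)]


def fraction_plausible(c_atoms, n_atoms, ideal=IDEAL_CN_LENGTH, tol=TOLERANCE):
    """Fraction of peptide bonds whose length is within tol of the ideal value."""
    lengths = peptide_bond_lengths(c_atoms, n_atoms)
    if not lengths:
        return 0.0
    plausible = sum(1 for d in lengths if abs(d - ideal) <= tol)
    return plausible / len(lengths)


# Toy three-residue backbone: the first bond is ideal, the second is stretched.
c_atoms = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
n_atoms = [(-2.4, 0.0, 0.0), (1.33, 0.0, 0.0), (6.3, 0.0, 0.0)]
print(fraction_plausible(c_atoms, n_atoms))  # 0.5
```

A metric of this kind can be computed on raw network output, before any energy minimization, which is the setting the pre-training strategy targets.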

Key takeaways

- We demonstrated that even simple models can learn better-quality peptide bonds when pre-trained on a large augmented set of refined antibody structures.

- During a phage-display or animal immunization campaign, it is not uncommon to produce 10⁵ to 10⁶ sequences, or even 10¹¹ (Alfaleh et al. 2020). Current methods such as IgFold, AbodyBuilder2, and ESMFold produce results of comparable quality in a matter of seconds, which is an order of magnitude improvement over the pioneer, AlphaFold2. However, even under the optimistic assumption that such methods, including refinement, take only one second per model, 10⁶ sequences would still take approximately 11.6 days on a powerful GPU.

- Observed Antibody Space, a relatively tiny snapshot of this space, currently holds 2,426,036,223 unique antibody sequences. Assuming an optimistic running time of 1 s per model, covering all of these sequences on a powerful GPU would take approximately 673,899 hours, or around 77 years.

- Producing physically accurate predictions without the need for energy refinement can reduce the running time and thus bring large-scale annotation of the antibody space within reach.

- As we demonstrated with the artificial CDR-H3 exercise, many networks show unstable behaviour (non-physical peptide bond lengths) when predicting novel sequences, suggesting limited generalizability. Developing plausible novel antibodies is not only the domain of structure prediction but also generative modelling.

- While our approach of introducing an augmented dataset for model pre-training improved model stability in CDR-H3 prediction, it might also be applicable to producing novel physically plausible structures using generative modelling regimes.
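
The running-time estimates in the takeaways follow from simple arithmetic, sketched below. The function name `gpu_time` is hypothetical, and the calculation assumes a single GPU processing one model at a time at a fixed cost per model, with a 365-day year.

```python
SECONDS_PER_HOUR = 3600
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365  # assumption: leap years ignored


def gpu_time(n_sequences, seconds_per_model=1.0):
    """Wall-clock time to model n_sequences sequentially on one GPU."""
    total_seconds = n_sequences * seconds_per_model
    hours = total_seconds / SECONDS_PER_HOUR
    days = hours / HOURS_PER_DAY
    years = days / DAYS_PER_YEAR
    return {"hours": hours, "days": days, "years": years}


# A campaign producing one million sequences at 1 s per model.
campaign = gpu_time(10**6)
print(f"{campaign['days']:.1f} days")  # 11.6 days

# All unique sequences currently in Observed Antibody Space at 1 s per model.
oas = gpu_time(2_426_036_223)
print(f"{oas['hours']:,.0f} hours, {oas['years']:.1f} years")  # 673,899 hours, 76.9 years
```

Because the cost scales linearly with the time per model, removing a refinement step from that per-model time reduces the total by the same proportion.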